Here is my guide on how to join an Ubuntu workstation to a Windows domain using SSSD and realmd. There are a few different methods out there for doing this, but from what I’ve tested and researched, using SSSD and realmd is the most up-to-date and easiest way to achieve the desired result at the time of writing. I’ve included links to all of the relevant documentation that I used while researching and putting together this guide.

I just want to say right off the bat that I’m no Linux expert. I’ve only recently started to dabble with Linux. I wanted to see if this could be done, so I tried it out in my test lab. I created this guide for myself so that I could use it again later when I no doubt forget how I did it in the first place. I couldn’t really find an up-to-date, step-by-step guide to joining an Ubuntu workstation to a Windows domain that was easy for beginners to follow, so I’m putting this up on my site in the hope that it may help someone else. If you see any glaringly obvious mistakes, or if there is a better way of doing something, let me know in the comments. This isn’t really Ubuntu-specific, as a lot of the steps in this guide have been adapted from the Red Hat and Fedora documentation. If you are following this guide, I’d suggest trying it out in a test environment first to make sure it does everything you need.

So in my test lab I tested a few different methods of joining an Ubuntu 16.04 computer to a Windows domain. The methods I tried were:

- Winbind

- SSSD

- RealmD & SSSD

As I said earlier, I found that for a new Linux user, the RealmD & SSSD method was the easiest and most effective way to join an Ubuntu workstation to a Windows domain. Your mileage may vary.

I’ll split this guide up into separate sections.

- Configuring the hosts file.

- Setting up the resolv.conf file.

- Setting up NTP.

- Installing the required packages.

- Configuring the Realmd.conf file.

- Fixing a bug with the packagekit package.

- Joining the Active Directory Domain.

- Configuring the SSSD.conf file.

- Locking down which Domain Users can login.

- Granting Sudo access.

- Configuring home directories.

- Configuring LightDM.

- Final Thoughts & Failures

- Links

1. Configuring the hosts file

To update the hosts file, edit /etc/hosts. On my workstation the fully qualified domain name wasn’t in the hosts file by default, so I had to add it. Note: coming from Windows, I’d never seen a 127.0.1.1 address used as a loopback address. Debian-based distributions use it to map the machine’s own hostname. Seems legit though.

In this example the hostname of the workstation I want to join to the domain is ubutest01.

```shell
sudo vi /etc/hosts
```

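Set the 127.0.1.1 entry to the workstation’s fully qualified name followed by its short name, in the following format:

```
127.0.1.1 ubutest01.bce.com ubutest01
```

If you’re also renaming the machine, set the new short name with `sudo hostnamectl set-hostname ubutest01` as well.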
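If you would rather script this hosts file edit than make it in vi, the shell function below is a minimal sketch of one way to do it. `set_loopback_hostname` is a hypothetical helper name, and it assumes GNU sed (the default on Ubuntu): it rewrites an existing 127.0.1.1 line, or appends one if the file doesn’t have one.

```shell
# Hypothetical helper: point the 127.0.1.1 entry in a hosts file at the
# workstation's FQDN and short name. An existing 127.0.1.1 line is replaced;
# if there is none, a new line is appended. Requires GNU sed (sed -i).
set_loopback_hostname() {
    local hosts_file=$1 fqdn=$2 short=$3
    if grep -q '^127\.0\.1\.1[[:space:]]' "$hosts_file"; then
        sed -i "s/^127\.0\.1\.1[[:space:]].*/127.0.1.1 ${fqdn} ${short}/" "$hosts_file"
    else
        printf '127.0.1.1 %s %s\n' "$fqdn" "$short" >> "$hosts_file"
    fi
}
```

From a root shell (`sudo -i`), paste the function and run `set_loopback_hostname /etc/hosts ubutest01.bce.com ubutest01`.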

Reboot the system for the changes to take effect.

```shell
sudo reboot
```

To check that the short name and the fully qualified name are what you expect:

```shell
hostname
hostname -f
```

2. Setting up the resolv.conf file

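Make sure your Ubuntu computer can talk to your DNS servers. By default on Ubuntu 16.04, NetworkManager runs a local dnsmasq instance, so /etc/resolv.conf points at it (`nameserver 127.0.1.1`) rather than at your domain’s DNS servers.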

To change it to have the actual DNS servers that you are using do the following:

```shell
sudo vi /etc/NetworkManager/NetworkManager.conf
```

Comment out the dns=dnsmasq line.

```
#dns=dnsmasq
```
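The same change can be made non-interactively. This sketch (`disable_nm_dnsmasq` is a made-up name, and it assumes GNU sed) comments out only an active `dns=dnsmasq` line, so running it a second time changes nothing.

```shell
# Hypothetical helper: comment out an active "dns=dnsmasq" line in a
# NetworkManager.conf file. Lines that are already commented are left alone.
disable_nm_dnsmasq() {
    sed -i 's/^[[:space:]]*dns=dnsmasq[[:space:]]*$/#dns=dnsmasq/' "$1"
}
```

As root, run `disable_nm_dnsmasq /etc/NetworkManager/NetworkManager.conf`, then restart NetworkManager as shown next.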

Then restart the network manager.

```shell
sudo systemctl restart network-manager.service
```

If you have set the DNS servers via the GUI, you should then see them in the resolv.conf file:

```shell
cat /etc/resolv.conf
```

Check that you can resolve the SRV records for the domain by running the following:

```shell
dig -t SRV _ldap._tcp.bce.com | grep -A2 "ANSWER SECTION"
```
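If you only want the domain controller names rather than the whole answer section, `dig +short` prints each SRV record on one line as priority, weight, port and target. The hypothetical `srv_targets` filter below pulls out the targets and exits non-zero when nothing came back, which makes it handy in a script.

```shell
# Hypothetical helper: read "dig +short -t SRV" output on stdin
# (priority weight port target), print each target host without its
# trailing dot, and exit non-zero if no SRV records were found.
srv_targets() {
    awk 'NF == 4 { sub(/\.$/, "", $4); print $4; found = 1 }
         END { exit (found ? 0 : 1) }'
}
```

For example: `dig +short -t SRV _ldap._tcp.bce.com | srv_targets`.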

3. Setting up NTP

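It’s important to synchronize time with your domain controllers so that Kerberos works correctly, since Kerberos rejects authentication when the clocks drift too far apart (five minutes by default). Install NTP:

```shell
sudo apt -y install ntp
```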

Edit the ntp.conf file:

```shell
sudo vi /etc/ntp.conf
```

Comment out the Ubuntu pool servers and add your own domain controllers instead. For example:

```
server dc.bce.com iburst prefer
```
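Here is a sketch of the same ntp.conf edit as a function. It assumes the stock Ubuntu entries are `pool` or `server` lines whose host name contains "ubuntu", and it needs GNU sed; `use_dc_for_ntp` is a hypothetical name. Check the result before restarting, as your ntp.conf may differ.

```shell
# Hypothetical helper: comment out default Ubuntu "pool"/"server" lines in an
# ntp.conf file and append a preferred server line for the given domain
# controller, unless that exact line is already present. Requires GNU sed.
use_dc_for_ntp() {
    local conf=$1 dc=$2
    sed -i -E 's/^(pool|server)([[:space:]]+[^[:space:]]*ubuntu)/#&/' "$conf"
    grep -qx "server ${dc} iburst prefer" "$conf" ||
        printf 'server %s iburst prefer\n' "$dc" >> "$conf"
}
```

From a root shell, run `use_dc_for_ntp /etc/ntp.conf dc.bce.com` and repeat it for each additional DC.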

Restart the ntp service.

```shell
sudo systemctl restart ntp.service
```

Then to check if it’s working try running:

```shell
ntpq -p
```
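In the `ntpq -p` output, the peer that ntpd has selected as its synchronization source is marked with a `*` in the first column. The hypothetical filter below prints that peer, or fails if none is selected yet (this can take a few minutes after a restart). Note that ntpq may truncate long host names.

```shell
# Hypothetical helper: read "ntpq -p" output on stdin and print the remote
# column of the peer marked "*" (the current sync source). Exits non-zero
# when no peer has been selected.
ntp_sync_peer() {
    awk 'substr($0, 1, 1) == "*" { print substr($1, 2); found = 1 }
         END { exit (found ? 0 : 1) }'
}
```

For example: `ntpq -p | ntp_sync_peer`.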

While working through this I came across a handy tool for checking that you’re syncing correctly:

```shell
sudo apt -y install ntpstat
```

Then run:

```shell
ntpstat
```

Should be syncing like a boss.

4. Installing the required packages.

Install the necessary packages:

```shell
sudo apt -y install realmd sssd adcli libwbclient-sssd krb5-user sssd-tools samba-common packagekit samba-common-bin samba-libs
```

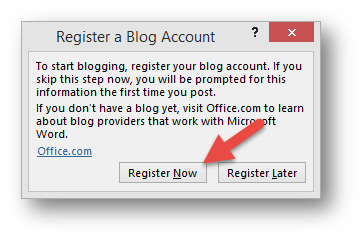

If you are presented with the following screen, put the domain name in CAPITALS.
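If you’d rather skip that prompt, for example when scripting the install, debconf can be given the answer before you run the install command. This is a sketch that assumes the prompt is krb5-config’s `krb5-config/default_realm` question; `krb5_realm_selection` is a hypothetical helper that upper-cases the domain for you.

```shell
# Hypothetical helper: print a debconf selection that pre-answers the
# krb5-config default realm question with the upper-cased domain name.
krb5_realm_selection() {
    printf 'krb5-config krb5-config/default_realm string %s\n' \
        "$(printf '%s' "$1" | tr '[:lower:]' '[:upper:]')"
}
```

Run `krb5_realm_selection bce.com | sudo debconf-set-selections` first, then the `apt install` command. Preseeding only helps if krb5-config isn’t installed yet.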

5. Configuring the Realmd.conf file

Make the following changes to the realmd.conf file before using realmd to join the domain. This will give domain users a home directory in the format /home/user. By default it will be /home/domain/user. You might want it like that; I do not. You can read more about these options in the realmd.conf man page listed in the Links section.

Note: If your domain users won’t use fully qualified names, you may run into issues if a local Linux user has the same account name as an Active Directory account.

```shell
sudo vi /etc/realmd.conf
```

```
[active-directory]
os-name = Ubuntu Linux
os-version = 16.04

[service]
automatic-install = yes

[users]
default-home = /home/%u
default-shell = /bin/bash

[bce.com]
user-principal = yes
fully-qualified-names = no
```

6. Fix a bug with the Packagekit package.

There is a bug with the packagekit package in Ubuntu 16.04. You will need to apply this workaround, otherwise realm will hang when you try to join the domain.

Note: I had to do this when I originally wrote this guide in May 2016. It may have been fixed by the time you read this, but I’ve left it in just in case.

```shell
sudo add-apt-repository ppa:xtrusia/packagekit-fix
sudo apt update
sudo apt upgrade packagekit
```

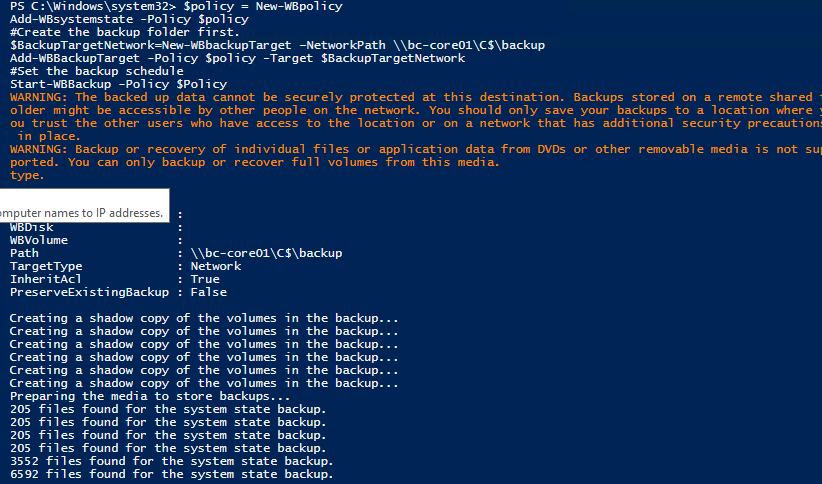

7. Join Ubuntu Workstation to a Windows Domain.

Now it’s time to join the domain. First, check that realm can discover the domain you will be joining:

```shell
realm discover bce.com
```

Get a Kerberos ticket for a domain user that has privileges to join computers to the domain (a_craig in my lab):

```shell
kinit -V a_craig
```

Now you can join the domain using realmd.

```shell
sudo realm --verbose join -U a_craig bce.com
```

To do a quick test to see if it’s worked, look up a domain user:

```shell
id craig
```

The output lists all of the domain groups that the domain user craig belongs to. It’s worked. HUZZAH!
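Another quick check is `realm list`, which shows each joined domain with a `configured: kerberos-member` line. The sketch below (`realm_is_joined` is a hypothetical name) assumes that output layout: the domain name unindented, followed by indented `key: value` lines.

```shell
# Hypothetical helper: read "realm list" output on stdin and succeed only if
# the named domain reports "configured: kerberos-member".
realm_is_joined() {
    awk -v want="$1" '
        /^[^ \t]/ { current = $1 }
        current == want && $1 == "configured:" && $2 == "kerberos-member" { ok = 1 }
        END { exit (ok ? 0 : 1) }'
}
```

For example: `realm list | realm_is_joined bce.com && echo "joined"`.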

OK, now that’s done. Let’s tweak!

8. Configuring the SSSD.conf file.

I’d like to enable Dynamic DNS and some other features that I couldn’t set via the realmd.conf file. We now have the opportunity to tweak these settings in the sssd.conf file. I’ve added the following to the [domain/bce.com] section:

```shell
sudo vi /etc/sssd/sssd.conf
```

```
auth_provider = ad
chpass_provider = ad
access_provider = ad
ldap_schema = ad
dyndns_update = true
dyndns_refresh_interval = 43200
dyndns_update_ptr = true
dyndns_ttl = 3600
```
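If you want to set these from a script instead, here’s a minimal sketch (`sssd_set_option` is a hypothetical helper). It replaces the key if the domain section already has it, otherwise adds it at the end of that section, and leaves the file alone if the section doesn’t exist. It writes the result back with `cat` so the file keeps its existing owner and 0600 permissions, which sssd insists on before it will start.

```shell
# Hypothetical helper: set "key = value" inside the [domain/DOMAIN] section
# of an sssd.conf-style file. An existing key in that section is replaced;
# otherwise the key is added at the end of the section. If the section is
# missing, the file is left unchanged.
sssd_set_option() {
    local conf=$1 domain=$2 key=$3 value=$4 tmp rc
    tmp=$(mktemp) || return 1
    awk -v sect="[domain/${domain}]" -v key="$key" -v val="$value" '
        # Emit the new key once, when leaving the target section.
        function flush() { if (insect && !done) { print key " = " val; done = 1 } }
        /^\[/ { flush(); insect = ($0 == sect) }
        insect {
            # Compare the text before "=" (whitespace stripped) with the key.
            split($0, kv, "=")
            k = kv[1]
            gsub(/[ \t]/, "", k)
            if (k == key) {
                if (!done) { print key " = " val; done = 1 }
                next
            }
        }
        { print }
        END { flush() }' "$conf" > "$tmp" && cat "$tmp" > "$conf"
    rc=$?
    rm -f "$tmp"
    return "$rc"
}
```

From a root shell, run for example `sssd_set_option /etc/sssd/sssd.conf bce.com dyndns_update true` for each option, then `systemctl restart sssd`.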

You can find a full list of options to tweak at the sssd.conf man page.

9. Locking down which Domain Users can login.

Now, let’s restrict which domain users can log in. First, deny logins for all domain users:

```shell
sudo realm deny -R bce.com -a
```

I want members of a specific group (linuxadmins) to be able to log in, as well as the Domain Admins group:

```shell
sudo realm permit -R bce.com -g linuxadmins domain\ admins
```
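To confirm what realm recorded, `realm list` reports the allowed groups on a `permitted-groups:` line under the domain. Here’s a small filter for that, assuming realm list prints the domain name unindented followed by indented `key: value` lines; `realm_permitted_groups` is a hypothetical name.

```shell
# Hypothetical helper: read "realm list" output on stdin and print the
# "permitted-groups:" value reported for the named domain.
realm_permitted_groups() {
    awk -v want="$1" '
        /^[^ \t]/ { current = $1; next }
        current == want && $1 == "permitted-groups:" {
            sub(/^[ \t]*permitted-groups:[ \t]*/, "")
            print
        }'
}
```

Running `realm list | realm_permitted_groups bce.com` should list linuxadmins and domain admins.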

10. Granting Sudo Access.

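Now let’s grant some sudo access. Open the sudoers file with visudo, which checks the syntax before saving, and add a rule for each domain group that should have sudo rights:

```shell
sudo visudo
```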
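Rather than editing the main sudoers file, one option is a drop-in file under /etc/sudoers.d, which Ubuntu’s default sudoers includes. The sketch below prints one rule per group, escaping spaces with a backslash as sudoers requires. The group names are assumed to match what `id` shows for your users, and `sudoers_for_groups` and the file name `domain-groups` are hypothetical.

```shell
# Hypothetical helper: print a sudoers rule granting full sudo rights to each
# group given as an argument. Spaces in group names are escaped as "\ ".
sudoers_for_groups() {
    local group
    for group in "$@"; do
        printf '%%%s ALL=(ALL:ALL) ALL\n' "$(printf '%s' "$group" | sed 's/ /\\ /g')"
    done
}
```

For example, `sudoers_for_groups linuxadmins "domain admins" | sudo tee /etc/sudoers.d/domain-groups`, then `sudo chmod 0440 /etc/sudoers.d/domain-groups` and `sudo visudo -cf /etc/sudoers.d/domain-groups` to check the syntax.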

11. Configuring home directories.

Let’s set things up so that a home directory is created automatically the first time a domain user logs in:

```shell
sudo vi /etc/pam.d/common-session
```

Add this line to the bottom:

```
session required pam_mkhomedir.so skel=/etc/skel/ umask=0022
```
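Because it’s easy to end up with the line added twice when repeating these steps, here’s a sketch of an idempotent version (`ensure_mkhomedir` is a hypothetical name) that appends the line only if an identical one isn’t already there.

```shell
# Hypothetical helper: append the pam_mkhomedir session line to a PAM file
# unless an identical line is already present.
ensure_mkhomedir() {
    local line='session required pam_mkhomedir.so skel=/etc/skel/ umask=0022'
    grep -qxF "$line" "$1" || printf '%s\n' "$line" >> "$1"
}
```

From a root shell, run `ensure_mkhomedir /etc/pam.d/common-session`.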

12. Configuring LightDM

The last thing I want to do is edit the lightdm conf file so that I can log in with a domain user at the login prompt.

```shell
sudo vi /etc/lightdm/lightdm.conf
```

```
[SeatDefaults]
allow-guest=false
greeter-show-manual-login=true
```
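Newer LightDM releases name this section `[Seat:*]`, treat `[SeatDefaults]` as a deprecated alias, and also read drop-in files from /etc/lightdm/lightdm.conf.d/. Assuming your LightDM version supports both, the sketch below writes the same two settings to a drop-in instead of editing lightdm.conf; `write_lightdm_dropin` and the file name `50-domain-login.conf` are hypothetical.

```shell
# Hypothetical helper: write a LightDM drop-in into the directory given as $1
# that disables the guest session and shows a manual username field.
write_lightdm_dropin() {
    local dir=$1
    mkdir -p "$dir" || return 1
    cat > "$dir/50-domain-login.conf" <<'EOF'
[Seat:*]
allow-guest=false
greeter-show-manual-login=true
EOF
}
```

From a root shell, run `write_lightdm_dropin /etc/lightdm/lightdm.conf.d`.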

I think that’s all the tweaking I’m going to do. I’m going to reboot and see if I can login.

```shell
sudo reboot
```

Once the login screen appears, you should be able to log in manually: click Login and enter a domain username and password.

I can log in. Huzzah!

13. Final Thoughts & Failures

This was a fun process and I learned a lot about Ubuntu and Linux in creating this guide. There were a few failures however so it wasn’t all smooth sailing.

Dynamic DNS

So after all that, I still had issues with Dynamic DNS. I researched this as much as I could but couldn’t find a resolution. I manually added the A records on my DNS server but I’d really like to get Dynamic DNS working. If anyone knows where I have gone wrong or can point out how to get this working please leave a comment.

SAMBA File Sharing

I also had some issues after this with getting SAMBA/CIFS file sharing working with Windows authentication. I would like to be able to share a folder in Ubuntu with Windows users and have them authenticate to the Ubuntu share with their Windows credentials. I’ve spent a fair bit of time trying to find a resolution to this, and played a bit with ACLs in Ubuntu as well, but couldn’t get it working properly. I put this down to being fairly new to Linux and not fully understanding some of the intricacies of SAMBA and Linux authentication. If anyone can point me in the right direction for getting SAMBA file sharing working, please leave me a comment.

14. Links

Below are the links that I used when researching this guide.

SSSD-AD Man Page

http://linux.die.net/man/5/sssd-ad

SSSD.Conf Man Page

http://linux.die.net/man/5/sssd.conf

SSSD-KRB5 Man Page

http://linux.die.net/man/5/sssd-krb5

SSSD-SIMPLE Man Page

http://linux.die.net/man/5/sssd-simple

PAM_SSS Module Man Page

http://linux.die.net/man/8/pam_sss

SSSD - Fedora

https://fedorahosted.org/sssd/

Redhat - Ways to Integrate Active Directory and Linux Environments

https://access.redhat.com/documentation/en-US/Red_Hat_Enterprise_Linux/7/html/Windows_Integration_Guide/introduction.html

Redhat - Using Realmd to Connect to an Active Directory Domain

https://access.redhat.com/documentation/en-US/Red_Hat_Enterprise_Linux/7/html/Windows_Integration_Guide/ch-Configuring_Authentication.html

Realm Man Page

http://manpages.ubuntu.com/manpages/trusty/man8/realm.8.html

Realmd.conf Man Page

http://manpages.ubuntu.com/manpages/trusty/man5/realmd.conf.5.html

Correcting DNS issue by editing Resolv.Conf file

http://askubuntu.com/questions/201603/should-i-edit-my-resolv-conf-file-to-fix-wrong-dns-problem